When it comes to safety, we hold machines and humans to very different standards. In 2016, there were 34,439 fatal road crashes in the US, but we regard driving alongside other humans as ‘safe enough’. Accidents are tragic, but they happen.

However, if roads were fully automated and there were 30,000 fatal accidents, would we be so understanding? Would we still call them ‘safe enough’ – and what does that mean?

To get a better understanding, we spoke to Chris Bessette, program manager for autonomous driving at Draper, and one of the world’s foremost experts on autonomy and LIDAR.

Chris Bessette, program manager for autonomous driving at Draper. Image credit: Draper

Draper is an engineering research laboratory that was originally part of MIT but was spun off in the 1970s. It’s best known for its work in aerospace and undersea vehicles, but in the last few years it has also begun working on safety for self-driving cars.

Autonomous cars might seem like a strange move for Draper, but as Bessette explains, it does make sense when you consider the lab’s heritage.

“We’ve been working in lots of different areas where you have to build smarts into the platforms,” he says. “You can’t have a remote operator for a lot of the different projects that we work on, so we’ve been building the autonomic capability for decades. We’re able to leverage that in-depth knowledge that we have to help develop an autonomous, self-driving car.

“The other piece of it [is that] Draper understands what it’s like to develop a system that’s safety-critical,” Bessette adds. “Whether it be a missile, for example, or an underwater drone, the application spaces we work in demand perfection. So those thoughts really translate directly to self-driving cars.”

Safety issues

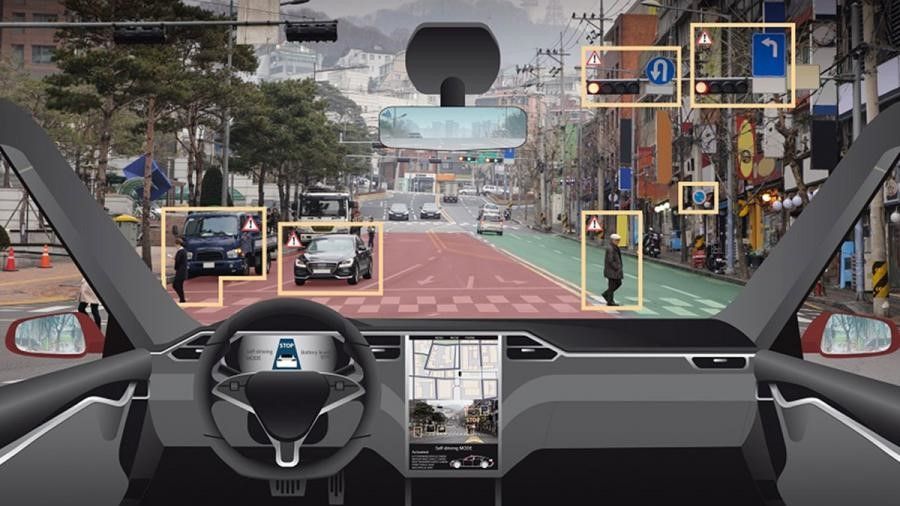

Bessette says the main safety issue for autonomous cars is perception. He splits this into two pieces: the sensors themselves, and the software. You can have the best algorithms in the world, but if the imagery from the car’s sensors is poor quality, there’s no point.

“One thing I think a lot of us take for granted is that the human eye is fantastic,” he says. “It’s so much better than any automotive-grade sensors that we have today. So the first challenge is actually getting the sensors – the cameras, the lidar, the radar etc – getting those sensors so they are closer to being on par, if not totally on par with the quality of the human eye.”

Then, once you have that high quality imagery, you need to use it to make decisions – identifying cars, people and other constraints. “That’s the other major challenge,” says Bessette, “and I think there’s a lot more work that needs to go into that.”

Draper is developing a solid state, microelectromechanical-based lidar that fits all components except the optics on a single chip. Image credit: Draper


For example, neural networks can be trained to recognize certain patterns, but those patterns can be fooled.

“So there was an experiment where someone took a standard fire hydrant that we see all over the roads today, and they painted it to look like the Nintendo character Mario. They painted it to look like that, and it tricked all of the nets. They didn’t know that was a fire hydrant anymore.”

That’s a pretty extreme example, but it highlights how brittle the current state of the art really is. It works well enough under optimal conditions, but real world driving conditions can be downright hostile.

Defining ‘safe enough’

Bessette says the question of ‘safe enough’ isn’t being discussed enough, but it’s something that Draper is giving a lot of thought to. At the moment, he says, the industry is too fragmented. To establish a standard, we need better co-ordination between local, state and federal governments (or their equivalents in other countries) and car designers.

“We have a lot of OEMs, for example, that are doing what they think is the right approach, but all the OEMs are designing to different requirements for what is ‘good enough’,” he says. “So I think that we need to put more co-ordination there.”

He draws a comparison with the Federal Aviation Administration (FAA) – the body that regulates aviation in the US: “If the FAA wanted a new safety measure to be built into the airlines, the airlines pretty much have to fall in line in being compliant with that requirement. And there’s not really an analog that has similar regulatory authority for self-driving cars.

“I think until those authorities start to form and start to work with the car manufacturers to work out how you define ‘safe enough’, we have to figure out what that common definition is. And I think it’s going to be difficult because everyone’s designing to something a little bit different.”

Building trust

Automakers including Ford and Jaguar Land Rover are working on various ways to help human road users feel comfortable sharing the streets with autonomous vehicles. JLR has conducted experiments on simulated roads with cars that make eye contact and beam their planned routes onto the road, while Ford is testing pedestrians’ reactions to a mock autonomous car fitted with an extensive system of light indicators.

Ford tested pedestrians’ reactions to a ‘driverless car’ operated by a driver disguised as a seat. Image credit: Ford


In both cases, the carmakers are trying to make the vehicles’ behaviour predictable – something Bessette agrees is essential for people to feel comfortable living and working alongside machines. He cites an example from Draper’s work with another client, where humans worked in ‘teams’ with robots – each playing to their own strengths.

When the robot’s behaviour was predictable, the human-robot team was stronger than the sum of its parts. However, when the robot did something unexpected, the human teammate’s trust rapidly eroded.

“I think predictability is absolutely key,” Bessette says. “If people see self-driving cars that are driving more how humans would, and they can say ‘Oh, that car’s taking a right-hand turn like I would,’ that helps them almost forget that that’s a computer driving the car as opposed to a human.”

Preparing for the future

Autonomous cars are already being trialled in parts of the US (including Boston, where Draper is based), and the UK government has announced plans to begin testing driverless vehicles in Britain this year. However, we’re still a long way from trusting them outside tightly controlled conditions.

“For example, if a car manufacturer wants to do testing here in Boston, they have to come and explain what their plan is and why they want to test there,” says Bessette. “They have to effectively apply for a license. Then, if that license is granted, there are some restrictions on when they can and cannot operate for some amount of time. If results show that they’re operating safely and they meet certain criteria, they can then test in a broader set of environments, whether it’s in less-than-ideal environmental conditions like rain or snow. So the reins are relaxed a little bit.”

Self-driving cars on public streets also have safety drivers, who scan the environment for dangers as they would if they were operating the car themselves, but also have a display (often a laptop) that lets them see what the vehicle is planning next. If its course of action looks dangerous, they can intervene and take manual control.

Self-driving cars can be designed to navigate safely without GPS with the help of the new Draper APEX Gyroscope. Image credit: Draper


There are companies planning to roll out fleets of totally autonomous taxis. Bessette says this transition period will be one of the biggest challenges for self-driving cars, when we have a mixture of human-driven and autonomous cars sharing the same roads. He doesn’t advocate banning human drivers from the roads when this begins, but hypothesizes that it would make things easier.

“In this transition period we’re going to have a period of lots of human-driven cars on the road and some self-driving cars, and then over time the self-driving car population will increase and the human-driven car population will decrease,” he says.

“Interaction between self-driving cars and human-driven cars is going to be interesting because self-driving cars are actually programmed to be predictable – this comes back to trust – but they’re also programmed to be very conservative, probably much more conservative than your typical human driver, so how this whole thing plays out will be an interesting dynamic.”
