The strange, creepy world of “deepfakes,” videos (often explicit) with the faces of the subjects replaced by those of celebrities, set off alarm bells just about everywhere early this year. And in case you thought that sort of thing had gone away because people found it unethical or unconvincing, the practice is back with the highly convincing “Deep Video Portraits,” which refines and improves the technique.

To be clear, I don’t want to conflate this interesting research with the loathsome practice of putting celebrity faces on adult film star bodies. They’re also totally different implementations of deep learning-based image manipulation. But this application of technology is clearly here to stay, and it’s only going to get better, so we had best keep pace with it rather than be taken by surprise.

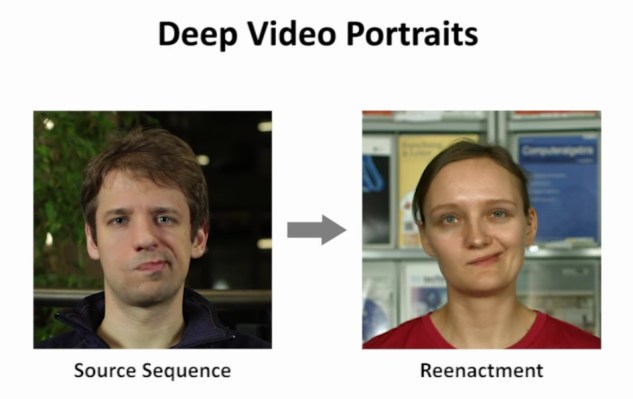

Deep Video Portraits is the title of a paper submitted for consideration this August at SIGGRAPH; it describes an improved technique for reproducing the motions, facial expressions, and speech movements of one person using the face of another. Here’s a mild example:

What’s special about this technique is how comprehensive it is. It uses a video of a target person, in this case President Obama, to get a handle on what constitutes the face, eyebrows, corners of the mouth, background, and so on, and how they move normally.

Then, by carefully tracking those same landmarks on a source video, it can make the necessary distortions to the President’s face, using his own motions and expressions as sources for that visual information.

So not only do the body and face move like the source video, but every little nuance of expression is captured and reproduced using the target person’s own expressions! If you look closely, even the shadows behind the person (if present) are accurate.

The researchers verified the effectiveness of this by comparing footage of a person actually saying something with what the deep learning network produced using that same footage as a source. “Our results are nearly indistinguishable from the real video,” says one of the researchers. And it’s true.

So, while you could use this to make video of anyone who’s appeared on camera appear to say whatever you want them to say — in your voice, it should be mentioned — there are practical applications as well. The video shows how dubbing a voice for a movie or show could be improved by syncing the character’s expression properly with the voice actor.

There’s no way to make a person do something or make an expression that’s too far from what they do on camera, though. For instance, the system can’t synthesize a big grin if the person is looking sour the whole time (though it might try and fail hilariously). And naturally there are all kinds of little bugs and artifacts. So for now the hijinks are limited.

But as you can see from the comparison with previous attempts at doing this, the science is advancing at a rapid pace. The differences between last year’s models and this year’s are clearly noticeable, and 2019’s will be more advanced still. I told you all this would happen back when that viral video of the eagle picking up the kid was making the rounds.

“I’m aware of the ethical implications,” coauthor Justus Thies told The Register. “That is also a reason why we published our results. I think it is important that the people get to know the possibilities of manipulation techniques.”

If you’ve ever thought about starting a video forensics company, now might be the time. Perhaps a deep learning system to detect deep learning-based image manipulation is just the ticket.

The paper describing Deep Video Portraits, from researchers at Technicolor, Stanford, the University of Bath, the Max Planck Institute for Informatics, and the Technical University of Munich, is available for you to read here on arXiv.
